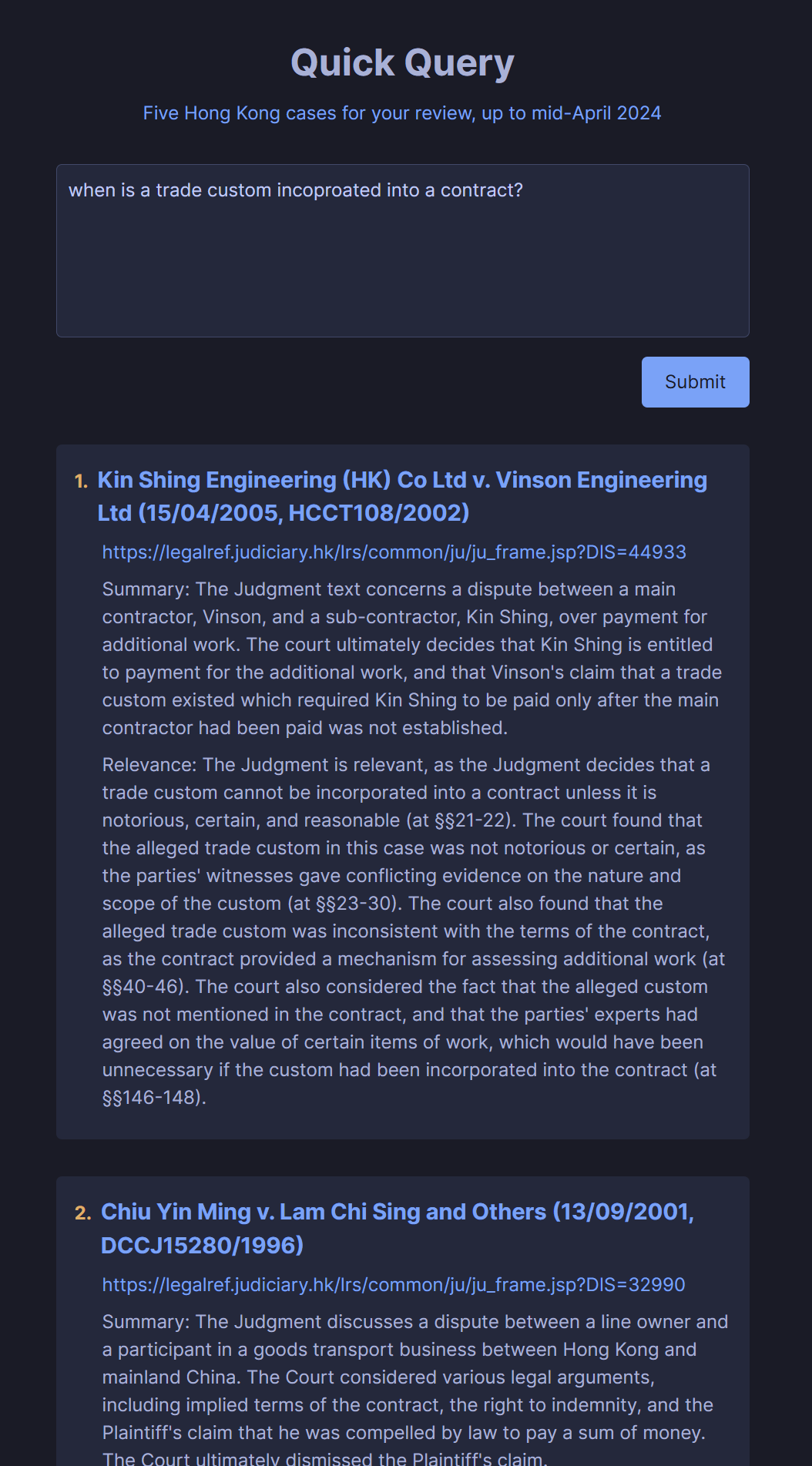

RAG Over Database of Legal Cases

2024, June

Techniques:

- Use a cheap, long-context llm (gemini flash) to summarize each case and add that short summary to the metadata. This improves big-picture context for retrieval (bm25 + vector search).

- At the last stage of throwing retrieved data into llm for relevance analysis, don't chunk. Put the entire case into the context window. Gemini flash will be able to generate paragraph references reliably enough. For lawyers, paragraph references are essential.

- Use a vector database which stores multiple vectors per document (vespa). The overall score of a document can be taken as the highest (or the average) of the scores of its vectors. It can also conveniently return the entire document instead of only a chunk.
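The per-document scoring in the last point can be sketched in a few lines. This is a minimal illustration in plain Python, not vespa's API: `score_documents` and the sample scores are hypothetical, and it shows both the "best chunk" and the "average of chunks" reading of the overall score.

```python
from collections import defaultdict
from statistics import mean


def score_documents(chunk_hits, mode="max"):
    """Collapse per-vector similarity scores into one score per document.

    chunk_hits: iterable of (doc_id, score) pairs, one per matched vector.
    mode: "max" keeps the best vector, "mean" averages all of them.
    """
    per_doc = defaultdict(list)
    for doc_id, score in chunk_hits:
        per_doc[doc_id].append(score)
    reducer = max if mode == "max" else mean
    ranked = [(doc_id, reducer(scores)) for doc_id, scores in per_doc.items()]
    # Highest-scoring document first.
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Made-up scores: case_a has one very strong paragraph, case_b is evenly relevant.
hits = [("case_a", 0.9), ("case_a", 0.1), ("case_b", 0.6), ("case_b", 0.5)]
by_max = score_documents(hits, mode="max")
by_mean = score_documents(hits, mode="mean")
```

The two modes can rank the same cases differently: taking the maximum favours a case with a single highly relevant passage, while averaging favours a case that is relevant throughout.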

Inevitable future directions:

- Automate further rounds of search. Will be feasible as models get cheaper and exhibit slightly stronger agentic behaviour. It's already possible, but it will be expensive.

- An o1-style reasoning llm to synthesize results. Also currently too expensive for what you'll get.

Real-time Conversational Language Learning

2024, August

Techniques:

- WebRTC, the same technology used for streaming video conferences, is used to stream data packets quickly.

- Pipeline is simply speech-to-text -> large language model -> text-to-speech. WebRTC makes it fast enough for conversation.

- Can interrupt the bot by talking over it.

- Real-time transcription of the conversation. Added pinyin for language learning.

- Web search function call so llm has access to updated information.
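The "talk over the bot" behaviour can be sketched with asyncio. This is a rough illustration only: `speak` and `converse` are hypothetical names, a timeout stands in for voice activity detection on the incoming audio, and none of it is WebRTC code.

```python
import asyncio


async def speak(text, spoken, word_delay=0.01):
    """Stand-in for streaming text-to-speech: 'plays' one word per tick."""
    for word in text.split():
        spoken.append(word)
        await asyncio.sleep(word_delay)


async def converse(reply_text, user_speaks_after):
    """Play a reply, cancelling playback if the user talks over it."""
    spoken = []
    playback = asyncio.create_task(speak(reply_text, spoken))
    # The timeout is a stand-in for voice activity detection on the mic stream.
    _, pending = await asyncio.wait({playback}, timeout=user_speaks_after)
    if pending:
        playback.cancel()  # the user interrupted: stop the bot mid-sentence
        await asyncio.gather(playback, return_exceptions=True)
    return spoken, bool(pending)


long_reply = " ".join(f"word{i}" for i in range(50))
cut_short, was_interrupted = asyncio.run(converse(long_reply, user_speaks_after=0.05))
finished, second_interrupted = asyncio.run(converse("ni hao", user_speaks_after=1.0))
```

Keeping playback in its own task is what makes the interruption cheap: the listening side never has to wait for the bot to finish a sentence.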

Why not just use openai or gemini voice chat:

- The models from big labs are their spokesmen to millions of people around the world, of all ages. They are necessarily boring, like a teacher giving a speech during assembly. Mainstream vs underground; corpo vs street kid; empire vs rebel alliance.

- Rolling your own voice chat, you can use any llm, including open models which have been finetuned to be less politically correct. Can also customize the transcript.

Jarvis on esp32

2025, February

Techniques:

- esp32, which only activates on the wakeword "Jarvis". It's not always recording.

- Connects to your own websocket server. You can use any speech-to-text, large language model, text-to-speech you choose.

- Websocket is slower than webrtc. For use in Asia, apis in the US tend to be slow.
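The "not always recording" behaviour amounts to a small gate in front of the network connection. The sketch below shows that logic in Python for readability; the real firmware would be device code, and `WakeWordGate`, its callbacks, and the string "frames" are all hypothetical stand-ins.

```python
class WakeWordGate:
    """Forward audio frames only after the wake word has been heard."""

    def __init__(self, detect_wakeword, is_silence, max_silent_frames=3):
        self.detect_wakeword = detect_wakeword  # callable: frame -> bool
        self.is_silence = is_silence            # callable: frame -> bool
        self.max_silent_frames = max_silent_frames
        self.listening = False
        self.silent_run = 0

    def feed(self, frame):
        """Return the frame if it should be sent to the server, else None."""
        if not self.listening:
            if self.detect_wakeword(frame):
                self.listening = True
                self.silent_run = 0
            return None  # nothing leaves the device while idle
        if self.is_silence(frame):
            self.silent_run += 1
        else:
            self.silent_run = 0
        if self.silent_run >= self.max_silent_frames:
            self.listening = False  # utterance over, back to idle
            return None
        return frame


# Strings stand in for audio frames; "" stands in for silence.
gate = WakeWordGate(
    detect_wakeword=lambda frame: frame == "jarvis",
    is_silence=lambda frame: frame == "",
    max_silent_frames=1,
)
frames = ["noise", "jarvis", "what", "time", "", "", "secret"]
sent = [out for out in map(gate.feed, frames) if out is not None]
```

Only the frames between the wake word and the following silence are forwarded; everything before and after stays on the device.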

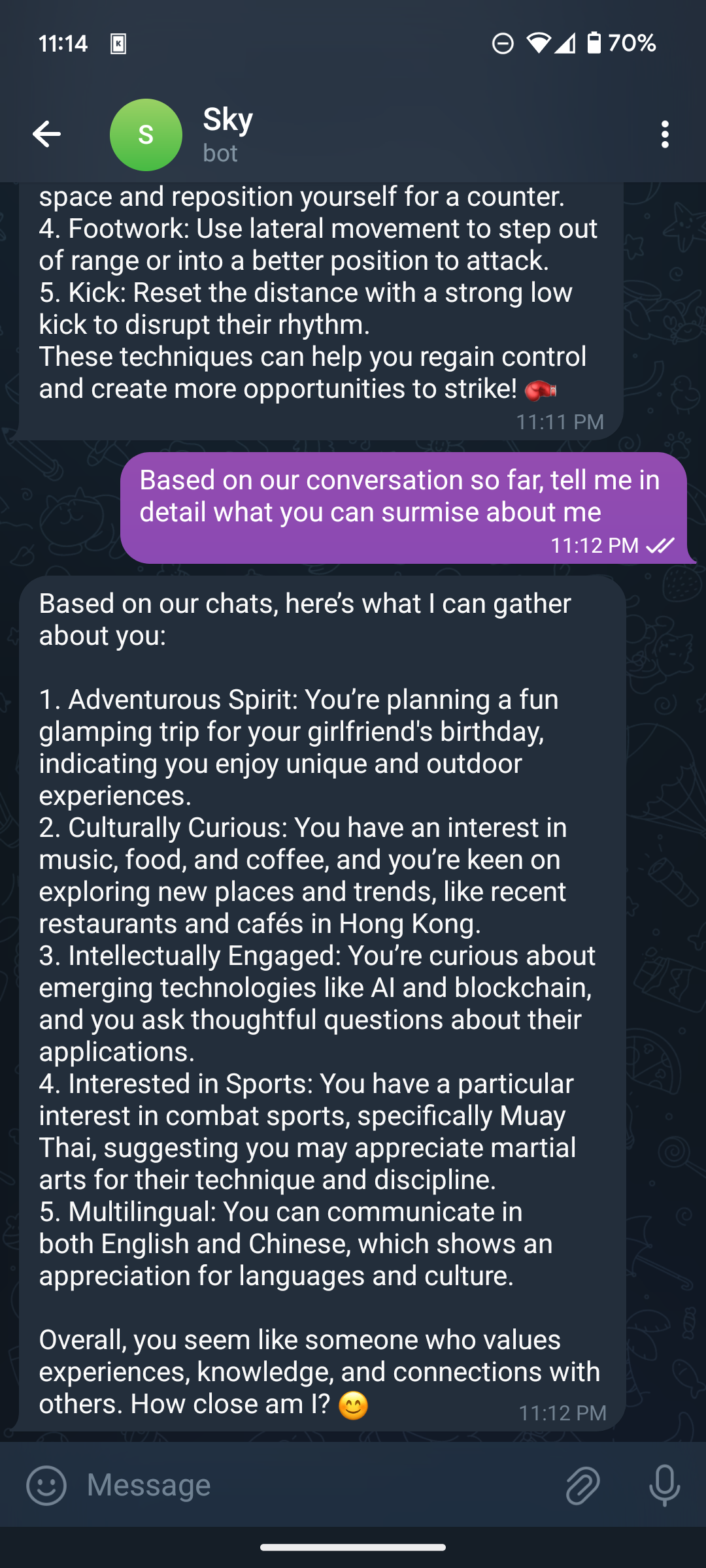

Telegram Bot with memgpt Memory

2024, October

Techniques:

- memgpt paper / letta library implements memory as follows: (1) self-editing the prompt (user section / agent section) for memories which should be frequently accessed; (2) writes long-term archival memory and all conversational history into (vector/)database; and (3) appropriate method of reading and writing memory after each user message handled by llm function calling.

- For prototyping and testing ux, a telegram bot is free and can be implemented in 5 minutes.
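The three-part memory layout described above can be sketched as a small class. This is an illustration under assumptions, not letta's API: the class, method names, and the keyword match standing in for vector search are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    # (1) Core memory: lives inside the prompt and is edited by the llm itself.
    core: dict = field(default_factory=lambda: {"user": "", "agent": ""})
    # (2) Archival memory and full conversation history: live in a database.
    archival: list = field(default_factory=list)
    history: list = field(default_factory=list)

    def core_memory_append(self, section, text):
        self.core[section] = (self.core[section] + "\n" + text).strip()
        return "ok"

    def archival_memory_insert(self, text):
        self.archival.append(text)
        return "ok"

    def archival_memory_search(self, query):
        # Keyword match as a stand-in for vector search.
        needle = query.lower()
        return [entry for entry in self.archival if needle in entry.lower()]

    def system_prompt(self):
        """Render core memory into the prompt sent with every request."""
        return f"[user]\n{self.core['user']}\n[agent]\n{self.core['agent']}"

    def handle_tool_call(self, name, arguments):
        # (3) The llm picks a memory operation through function calling.
        tools = {
            "core_memory_append": self.core_memory_append,
            "archival_memory_insert": self.archival_memory_insert,
            "archival_memory_search": self.archival_memory_search,
        }
        if name not in tools:
            raise ValueError(f"unknown tool: {name}")
        return tools[name](**arguments)


memory = AgentMemory()
memory.handle_tool_call("core_memory_append", {"section": "user", "text": "Name: Ada"})
memory.handle_tool_call("archival_memory_insert", {"text": "Ada asked about Vespa in October."})
found = memory.handle_tool_call("archival_memory_search", {"query": "vespa"})
```

In a real bot, `handle_tool_call` would be driven by the tool calls the llm returns after each user message, and `system_prompt()` would be rebuilt before the next request.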

Why not just use openai or gemini assistant:

- Same as for convo bot above. Big lab models = boring.

- As open models become even more reliable with function calling, more complex systems of memory management, like memgpt, can be implemented for production.

Legal Translation in Microsoft Word Plugin

2025, January

Observations:

- For legal translation, one tool will not be enough. Deepl may not be smooth. An llm may not be accurate. Neither knows much of the local legal jargon.

- This combines: deepl for basics + noun extraction and legal glossary search + llm to consolidate.

- Highlight and hotkey is a nice UI.
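The glossary and consolidation steps can be sketched as follows. This is a simplified illustration: substring matching stands in for noun extraction, the glossary entries and the draft translation are hand-written examples (not deepl output), and both function names are hypothetical.

```python
def match_glossary(source_text, glossary):
    """Return the glossary entries whose source term appears in the text."""
    return {term: rendering for term, rendering in glossary.items() if term in source_text}


def build_consolidation_prompt(source_text, draft_translation, matched_terms):
    """Assemble the prompt that asks an llm to merge the draft with the glossary."""
    term_lines = "\n".join(
        f"- {term} => {rendering}" for term, rendering in matched_terms.items()
    )
    return (
        "Revise the draft translation of the legal text below.\n"
        "Keep the meaning; use the glossary renderings where a term appears.\n\n"
        f"Source:\n{source_text}\n\n"
        f"Draft translation:\n{draft_translation}\n\n"
        f"Glossary:\n{term_lines or '- (no matches)'}\n"
    )


# Illustrative glossary entries only.
glossary = {"不可抗力": "force majeure", "善意第三人": "bona fide third party"}
source = "因不可抗力不能履行合同的，部分或者全部免除责任。"
draft = "If the contract cannot be performed due to irresistible force, liability is exempted in part or in whole."

matched = match_glossary(source, glossary)
prompt = build_consolidation_prompt(source, draft, matched)
```

Passing the matched terms explicitly means the llm only has to reconcile the draft with the glossary, rather than recall local jargon on its own.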

Voice Typing for Linux

2025, March

Observations:

- Voice typing services exist for macos and windows, but not linux (as of early 2025).

- Runs whisper locally. It is fast and useful enough on cpu (base.en whisper model).

- Hotkey to start recording, hotkey to stop recording. The transcript is automatically copied to the clipboard and pasted with ctrl+v.

- New trend, as of early 2025, of using voice typing to spam an ai with context / questions, e.g. "vibe coding".
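The two-hotkey flow can be sketched as a small controller. Recording, clipboard, and paste are injected as callables and are hypothetical stand-ins here; `make_whisper_transcriber` assumes the `openai-whisper` package is installed and is not exercised by the example.

```python
class VoiceTyper:
    """Start recording on one hotkey; transcribe and paste on the other."""

    def __init__(self, start_recording, stop_recording, transcribe, paste):
        self.start_recording = start_recording  # begins capturing audio
        self.stop_recording = stop_recording    # returns path to the recorded wav
        self.transcribe = transcribe            # wav path -> text
        self.paste = paste                      # copies text to clipboard and pastes it
        self.recording = False

    def on_start_hotkey(self):
        if not self.recording:
            self.start_recording()
            self.recording = True

    def on_stop_hotkey(self):
        if not self.recording:
            return None  # stop pressed without a recording in progress
        wav_path = self.stop_recording()
        self.recording = False
        text = self.transcribe(wav_path).strip()
        self.paste(text)
        return text


def make_whisper_transcriber(model_name="base.en"):
    """Build a transcriber backed by a local whisper model (assumed installed)."""
    import whisper

    model = whisper.load_model(model_name)
    return lambda wav_path: model.transcribe(wav_path)["text"]


# Fakes in place of the microphone, whisper, and the clipboard.
pasted = []
typer = VoiceTyper(
    start_recording=lambda: None,
    stop_recording=lambda: "/tmp/clip.wav",
    transcribe=lambda wav_path: " hello world ",
    paste=pasted.append,
)
typer.on_start_hotkey()
typed = typer.on_stop_hotkey()
```

Loading the model once in `make_whisper_transcriber` and reusing it keeps each hotkey press fast, since only the transcription itself runs per clip.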

Finetuning Llama 3.2 3b for Writing Style Conversion

2024, December Huggingface Model

Download and run in ollama (system prompt below):

ollama run hf.co/iach/judgegguf3
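Besides the interactive command, the model can be called through ollama's local HTTP API. The sketch below assumes a default ollama install listening on port 11434; the helper names are hypothetical, and the system prompt placeholder should be replaced with the full prompt from the Sample section.

```python
import json
import urllib.request


def build_chat_request(model, system_prompt, user_text, host="http://localhost:11434"):
    """Build a non-streaming request for ollama's /api/chat endpoint."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_text},
        ],
        "stream": False,  # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        f"{host}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def rewrite(request):
    """Send the request and return the model's reply (needs ollama running)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["message"]["content"]


request = build_chat_request(
    model="hf.co/iach/judgegguf3",
    system_prompt="<paste the system prompt from the Sample section>",
    user_text="The defendant does not have enough evidence.",
)
# improved = rewrite(request)  # uncomment with a local ollama server running
```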

Observations:

- Task: Convert legal English writing from someone with English as a second language to the style of an erudite English Judge (e.g. Lord Denning). It pays to sound posh.

- Simple prompting, even with frontier models, tended to make the writing overwrought and too flowery.

- Sonnet 3.5 did well with prompting and few-shot examples. But this would be expensive if used frequently.

- Finetuning produced good results. Only a few hundred writing snippets required.

- Llama 3.2 3b would run fast enough on CPU.

- For production, finetuning a chatgpt model or equivalent is recommended, or at minimum a 7b local model.

- In early 2025, Zed (IDE) shipped a finetuned qwen-2.5-coder 7b model for autocomplete. Small models aren't only toys.

Sample:

System prompt:

"You will be given a piece of legal writing by the user. You will output a piece of improved legal writing. Keep exactly the same meaning, but correct any errors and infelicities, and edit it to be in the exact style of Lord Denning (the renowned English Judge). ONLY output the improved legal writing in the style of Lord Denning, and nothing else. Do not give any explanation; do not add markdown or formatting; do not add new lines."

Original text (English as second language):

"The defendant does not have enough evidence, and his argument is not very good. He can't win because he cannot show that he and his business partner had an oral agreement for him not to pay his business partner until the PRC joint venture was profitable. It just seems unlikely, because this event is not certain. It may or may not happen. Many cases say inherent probabilities are relevant when thinking about credibility."

Llama 3.2 3b (not finetuned, too flowery):

"The defendant's case hinges on a crucial issue of credibility, which appears to be in tatters. The burden of proof rests squarely upon him to establish with reasonable certainty that an oral agreement existed between himself and his business partner, prohibiting the payment of dividends to the latter until the PRC joint venture commenced operations. Unfortunately for the defendant, this claim strains credulity on several counts, particularly given the inherent uncertainties surrounding the realisation of such a venture. The fact that numerous cases have endorsed the relevance of inherent probabilities in assessing credibility lends weight to the proposition that the defendant's case is, at best, highly speculative and unlikely to succeed."

Llama 3.2 3b (finetuned, closer to a "learned" legal style):

"The defendant has insufficient evidence and a somewhat unconvincing argument. He cannot succeed because he has failed to prove that he and his partner entered into an oral agreement for him not to pay his business partner until the P.R.C. joint venture was profitable. It seems improbable, because this event is in doubt. It may or it may not occur. Many cases have held that inherent probabilities are relevant when assessing credibility."